The spark-shell script

[double_happy@hadoop101 bin]$ cat spark-shell

1. cygwin=false   # to work with Linux tooling on a Windows machine you need to install Cygwin

[double_happy@hadoop101 bin]$ uname -r   # kernel release

case in

case $variable in

[double_happy@hadoop101 script]$ cat test.sh

[double_happy@hadoop101 script]$ cat case.sh
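As a minimal sketch of how the `cygwin` flag and `case ... in` fit together: the function below matches a `uname`-style string against the pattern `CYGWIN*`. The helper name `detect_cygwin` is made up for this illustration, and the sketch assumes that `uname` on Cygwin prints a name starting with `CYGWIN`.

```shell
# detect_cygwin is a hypothetical helper: it prints "true" when the given
# uname output looks like Cygwin, and "false" for anything else.
detect_cygwin() {
  case "$1" in
    CYGWIN*) echo true ;;    # glob pattern: any string starting with CYGWIN
    *)       echo false ;;   # default branch
  esac
}

cygwin=$(detect_cygwin "$(uname)")
echo "cygwin=$cygwin"
```

Each branch ends with `;;`, and `esac` (`case` spelled backwards) closes the statement.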

if -z

Shell parameters

if [ -z "${SPARK_HOME}" ]; then

  source "$(dirname "$0")"/find-spark-home

fi

[ -z STRING ]   # true if the length of "STRING" is zero
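A small sketch of the `-z` test in isolation. `is_empty` and `DEMO_HOME` are hypothetical names used only here; `DEMO_HOME` stands in for `SPARK_HOME`.

```shell
# is_empty is a hypothetical helper wrapping the [ -z STRING ] test.
is_empty() {
  if [ -z "$1" ]; then
    echo "empty"
  else
    echo "not empty"
  fi
}

DEMO_HOME=""               # stand-in for an unset or empty SPARK_HOME
is_empty "${DEMO_HOME}"    # prints: empty
DEMO_HOME=/opt/demo
is_empty "${DEMO_HOME}"    # prints: not empty
```

The double quotes around the variable matter: without them, a value containing spaces would be split into several words before `[` sees it.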

[double_happy@hadoop101 script]$ cat dirname.sh
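To illustrate what `"$(dirname "$0")"/find-spark-home` resolves to, here is a rough sketch. `dirname` only manipulates the string, so the paths do not need to exist. The path `/opt/spark/bin/spark-shell` and the helper `sibling_path` are assumptions made for the example.

```shell
# dirname strips the last path component.
dirname /opt/spark/bin/spark-shell   # prints: /opt/spark/bin
dirname spark-shell                  # prints: .

# sibling_path is a hypothetical helper: given the path of a script (what $0
# would hold), it prints the path of find-spark-home in the same directory.
sibling_path() {
  echo "$(dirname "$1")/find-spark-home"
}

sibling_path /opt/spark/bin/spark-shell   # prints: /opt/spark/bin/find-spark-home
```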

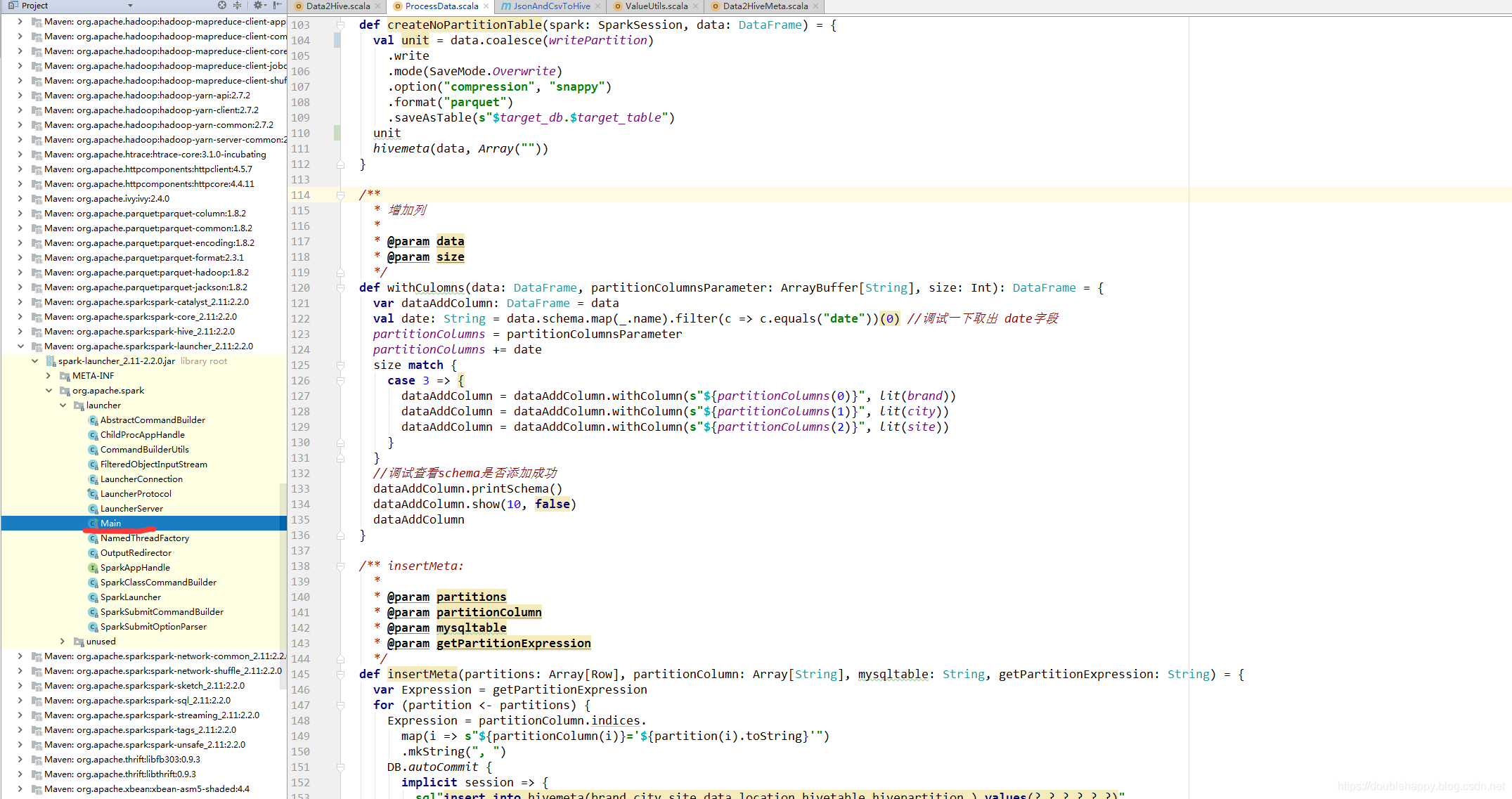

main

main "$@"   # inside the main function:

$@

[double_happy@hadoop101 script]$ cat main.sh

[double_happy@hadoop101 script]$ cat main.sh
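The reason for writing `main "$@"` with quotes can be shown with a short sketch. `count_args` is a hypothetical helper, and the arguments passed to `main` are arbitrary sample strings.

```shell
# count_args is a hypothetical helper that prints how many arguments it got.
count_args() { echo "$#"; }

main() {
  count_args "$@"   # every original argument stays one word
  count_args "$*"   # all arguments are joined into a single word
  count_args $@     # unquoted: arguments are re-split on whitespace
}

main --name "my app" --verbose
# prints 3, then 1, then 4
```

Only `"$@"` forwards the arguments exactly as the caller supplied them, which is what a wrapper script needs when it hands its arguments on to the next script.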

The spark-submit script

[double_happy@hadoop101 bin]$ cat spark-submit

exec "${SPARK_HOME}"/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@"

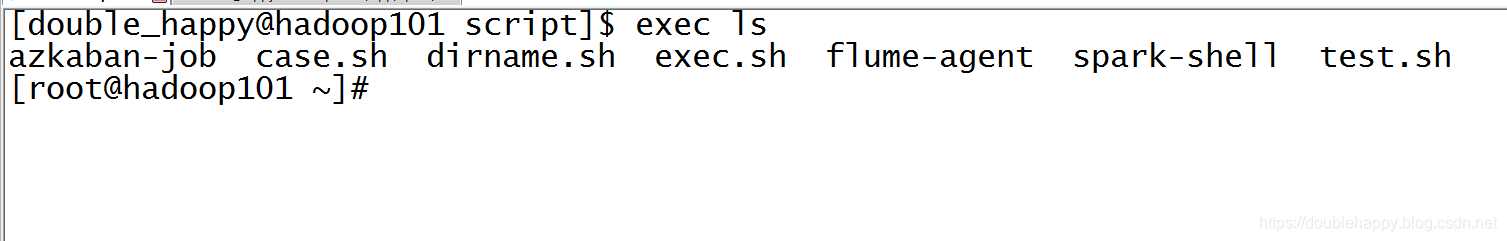

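The shell exits right away: `exec` replaces the current shell process with the command instead of returning to it, so nothing after the `exec` line runs.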

[double_happy@hadoop101 script]$ cat exec.sh
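A way to try `exec` without losing your terminal is to run it inside a subshell. The sketch below does that; `demo_exec` is a hypothetical name, and the parentheses are what keep `exec` from replacing the shell you are typing in.

```shell
# demo_exec is a hypothetical helper. Its body runs in a subshell ( ... ),
# so exec replaces only that subshell.
demo_exec() {
  (
    echo "before exec"
    exec echo "replaced by echo"
    echo "after exec"   # never reached: the subshell no longer exists
  )
}

demo_exec
# prints:
# before exec
# replaced by echo
```

Typing `exec` directly at an interactive prompt, without the subshell, ends the session as soon as the command finishes.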

The spark-class script

[double_happy@hadoop101 bin]$ cat spark-class

. "${SPARK_HOME}"/bin/load-spark-env.sh   # what is this doing?
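The leading `.` is the POSIX spelling of `source`: it runs the named file in the current shell, so any variables the file sets remain visible afterwards. A minimal sketch of the difference, using a throwaway file; `DEMO_ENV_FILE` and `DEMO_MASTER` are hypothetical names, not anything load-spark-env.sh defines.

```shell
unset DEMO_MASTER

# Write a tiny env file (hypothetical content, for illustration only).
DEMO_ENV_FILE=$(mktemp)
echo 'DEMO_MASTER=local[2]' > "$DEMO_ENV_FILE"

# Running the file in a child shell does not affect the current shell.
sh "$DEMO_ENV_FILE"
echo "after sh:     ${DEMO_MASTER:-unset}"   # prints: after sh:     unset

# Sourcing it with "." runs it in the current shell, so the variable sticks.
. "$DEMO_ENV_FILE"
echo "after source: ${DEMO_MASTER:-unset}"   # prints: after source: local[2]

rm -f "$DEMO_ENV_FILE"
```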

build_command() {

The overall spark-shell flow:

spark-shell {
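The chain of scripts can be mimicked with three stand-in functions. This is only a sketch, not a reconstruction of the listing above: the functions just echo what would be run. The `spark_submit` step mirrors the `exec` line shown earlier; the options passed by `spark_shell` (`--class org.apache.spark.repl.Main --name "Spark shell"`) are an assumption based on the script shipped with Spark and are not shown in this text.

```shell
# Stand-ins for the real scripts; each one only echoes or forwards arguments.
spark_class() {
  echo "spark-class $*"
}

spark_submit() {
  # mirrors: exec "${SPARK_HOME}"/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@"
  spark_class org.apache.spark.deploy.SparkSubmit "$@"
}

spark_shell() {
  # assumed options, see the note above
  spark_submit --class org.apache.spark.repl.Main --name "Spark shell" "$@"
}

spark_shell --master local
```

The user's arguments travel unchanged through every layer because each one forwards them with `"$@"`.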